Powerful Python Bootcamp

Learn World-Class Python Software Engineering Skills In Just 5 Hours Per Week.

- Time-Efficient Learning Path For Advanced Python, Designed For Busy Professionals

- Create Your Open-Source Project To Showcase Your Expertise

- Promote Your Professional Online Brand

Our Alumni Work At These Companies

Creating Your Artifact

Your Artifact Is A Dynamic Asset Which Attracts The Career Opportunities You Want.

It is a portfolio project carefully designed to:

- Attract exciting job opportunities,

- Build rewarding professional relationships, and ultimately

- Manifest the career you have always wanted.

Most portfolio projects do NOT accomplish these goals.

Powerful Python Bootcamp helps you create an Artifact which effectively accelerates your success.

Read more about creating your Artifact in the Guide.

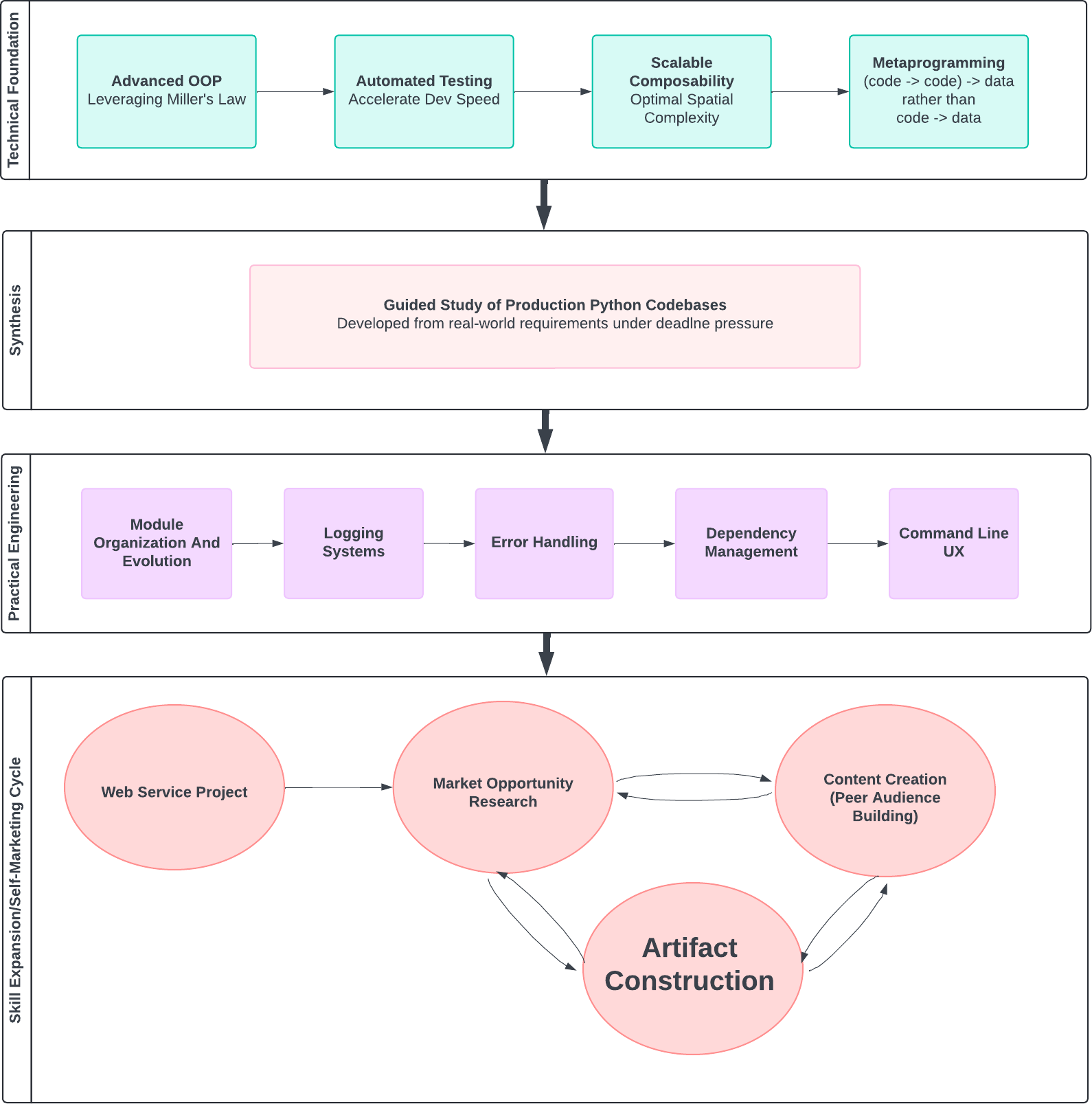

Technical Track

A Time-Efficient Curriculum Of Intermediate To Advanced Python Training, Skipping The Basics You Already Know.

Powerful Python's curriculum grew out of the instructor, Aaron Maxwell, teaching advanced Python to 10,000 technology professionals worldwide, in partnership with O'Reilly Media, with students from nearly every engineering domain, culture, and country.

This creates the most well-tested pro Python curriculum ever taught... and arguably the best for ANY programming language in history.

It is unlikely any other training will ever surpass its quality, rigor, or track record for results.

Group Mentoring

Get Your Questions Answered And Receive Expert Guidance From Experienced Pythonista Coaches.

These sessions are recorded. If you cannot attend live, just submit your questions beforehand and they will be answered on the recording for you.

The best part of group mentoring is that you learn from other students’ questions.

Bootcamp students are skilled technology professionals with high career ambitions, who consistently ask AMAZING questions you never would have thought to ask on your own.

This makes the group mentoring sessions a priceless learning experience for everyone. Plus it’s a lot of fun!

Boost Your Income

The Bootcamp Is Designed To Accelerate Your Career Progress And Explode Your Earning Potential.

You accomplish this by:

- Building your technical skill base,

- Elevating your professional reputation, and

- Learning to promote yourself as a sought-after thought leader.

Powerful Python Bootcamp helps you do all this, and more.

Extensive Group Mentoring Archive

The Most Extensive Repository Of Realistic And Advanced Discourse For Python Professionals In The World.

Filled with priceless insights and live coding demonstrations, for a wide range of practical, real-world topics in Python, software engineering, data science, and much more.

Topics include:

- Advanced uses of super()

- A live troubleshooting session, figuring out a bug in a lab under pytest

- Optional arguments in dataclasses

- Spilling some secrets from the Coder Dream Job course

- The three ways to use type annotations

- Aaron's opinions on JavaScript

- Reducing operations in PySpark

- The decorator pattern that should be ILLEGAL

- Peering under the hood of Powerful Python's marketing automation code

- The C3 Linearization algorithm

- How to inspect the source of your imported libraries

- Why we never use f-strings in logging

- Module organization

- Is Python high level or low level? How does it compare to other languages? What does 'high level' even mean? And what does one of the most famous Python libraries of all time have to teach us about this?

- Sentinel values, and how they relate to None, pandas.NA, and np.nan

- Higher-order function tricks

- The @functools.cache decorator, and how it relates to the memoization labs in Higher-Order Python

- The @functools.cached_property decorator (which is totally and completely different)

- When to use notebooks, and when to avoid them

- Walking through the codebase of a Django web application I recently cooked up, demonstrating many advanced techniques from Higher-Order Python and other Powerful Python courses. A real-world production application with a STACK of money on the line

- Exploratory mutations of the webviews.py lab

- Core concepts in the Twisted framework

- Levels of technological abstraction, and how understanding lower levels affects the effectiveness with higher levels

- How to learn a new programming language that's different from what you are familiar with

- A detailed walkthrough of how to do the labs in the most systematic way, and working with mocks in unittest

- Deep dive into the webframework.py lab from Higher-Order Python

- How to handle it when you start to become better than your peers

- How IDEs can get in the way of your learning (and how to deal with it)

- Testing functions that are inherently difficult to test

- Bash wrappers for Python programs

- The evolution of built-in exceptions from Python 2 to Python 3, and the lessons that evolution has for our coding today

- Higher-level strategies of automated testing

- Subclassing built-in types, and why str is different there

- Unconventional decorator patterns

- How to read PEPs

- Deferred Reference

- The security risks and other hazards of eval()

- The essence of super()

- Revealing the source of a script I wrote to generate the HTML version of the Powerful Python book

- Strategy for navigating multiple layers of abstractions

- A small distributed Pythonic app to get around a corporate firewall

- Details of the iterator protocol (and how generator objects fit into that)

- Understanding the Liskov Substitution Principle, and how it relates to Python's OOP syntax

- How to think about mocks

- Covering many advanced details of working with web services and the HTTP protocol

- Complexity trade-offs for code calling APIs

- Talking through a challenging network-engineering issue with strong security considerations

- Adding exception context in the "catch and re-raise" pattern

- Why the wait_for_chrome_download() method of DownloadDir (from Code Walkthroughs) is like a state machine

- The three pillars of concurrency in Python

- How @functools.cached_property relates to the memoize.py lab in Higher-Order Python

- Object lifecycle in Python, and the __del__() magic method

- A partial code walkthrough of the metaleads.py program, including comments on environment variables

- Prompt engineering troubleshooting

- Many fascinating details of generators and decorators

- A non-Python database design question

- Learning more advanced Git

- Deep distinctions on Python's generator model

- Is the GIL finally going away? And why it is important for Python concurrency. How threading, asyncio, and multiprocessing compare now... and how PEP 703 will change that.

- Looking at a multithreaded production codebase

- How object identity is defined in Python's language model

- My blasphemous opinion on Linus Torvalds's greatest achievement

- Scoping rules around nonlocal variables

- A broad survey of the different collection types in Python, beyond lists and dicts

- The different roles of type annotations

- System paths vs Python paths vs other paths

- Debugging diabolical decorators

- The concept of "cognitive cost" when coding

- Method Resolution Order

- Deeper understanding of lambdas (anonymous functions), including how Python does them differently than other languages

- Choosing good inheritance hierarchies

- Book recommendations for Python pros

- The right way to use Stack Overflow (and how to avoid misusing it)

- Generator objects/comprehensions/functions

- Troubleshooting a bug so subtle that we had to dig elbows-deep into the pandas source code to crack it

- Peering into the AsciiDoc source of the Powerful Python slide decks (the same format used by O'Reilly for its books)

- How instance, static and class methods differ in Python, and how that's all different from how it works in languages like Java

- Defensive asserts

- Talking about defaultdict... not just how to use it, but going deep into WHY it was designed the way it was, and the lessons it has for your own code

- Multithreaded/multiprocessor programming, and the best book for learning it deeply

- Diving deep into exception patterns

- Troubleshooting a high-throughput messaging architecture problem

- Many insights about automated testing

- What to do when you are facing an overwhelmingly complex coding problem

- A demonstration of expanding the test suite with more refined requirements, and how that affects the evolution of the application

- Strategies for cramming for a FAANG interview

- Classmethod vs. staticmethod

- Should you be worried about being replaced by a bot? In fact, many Python developers SHOULD be worried, but a small fraction will come out of this as big winners. Today's session tells you how to be one of them

- Generator algorithms

- The "walrus operator", formally called "assignment expressions" (PEP 572)

- Generator-based coroutines

- The intersection of module organization + dependency management

- Inter-Python-process communication

- How GitHub Copilot, ChatGPT, and other AI tools coming in the future affect your career

- A stateful low-level parsing algorithm

- PySpark

- The why and how of functools.wraps()

- How to catch exceptions in Twisted
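
To make one of the topics above concrete, here is a minimal sketch of how @functools.cache differs from @functools.cached_property. It is not taken from the Bootcamp sessions or labs; the names `slow_square`, `Report`, and `call_log` are hypothetical and exist only for illustration. It assumes Python 3.9 or later.

```python
import functools

# Hypothetical log, used only to show when the wrapped code actually runs.
call_log = []


@functools.cache  # memoizes a function, keyed by its arguments
def slow_square(n):
    call_log.append(f"slow_square({n})")
    return n * n


class Report:
    """Hypothetical class with one expensive, per-instance value."""

    def __init__(self, rows):
        self.rows = rows

    @functools.cached_property  # computed once per instance, then stored on it
    def total(self):
        call_log.append("total")
        return sum(self.rows)


slow_square(4)
slow_square(4)  # served from the cache; the function body does not run again
slow_square(5)  # new argument, so the body runs

report = Report([1, 2, 3])
report.total
report.total  # read back from the instance's __dict__; no recomputation

print(call_log)  # ['slow_square(4)', 'slow_square(5)', 'total']
```

The first decorator caches results of a function per argument set, while the second turns a method into an attribute that is computed on first access and then stored on that one instance.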

How Do I Know The Bootcamp Is Right For Me?

If You Write Python Code, The Bootcamp Will Quantum Leap Your Entire Future Career.

The best way to know: do the sample coding exercises, which include videos from the curriculum and the all-important "labs".

Questions & Answers

Three things:

- A focus on first principles to boost your creativity and innovative ability

- Leveraging cognitive psychology to accelerate your learning and performance

- An uncompromising focus on engineering excellence.

If you can write simple Python programs using functions, dicts and lists, and run them on the command line, you qualify. See the sample coding exercises also.

We recommend you invest at least 5 hours per week, and attend one group mentoring session per week. This is designed for busy professionals, and will fit in your schedule.

Those who invest 5-10 hours per week typically complete the main technical track in 4-7 weeks. The remaining material is more flexible, and you can invest additional time at that stage to reap greater benefits, if you like.

No problem. You can submit your question before the session, and we will answer it in detail during the call. After you watch the recording, if you have follow-up questions, just ask and we'll help you sort it out.

Yes, many. Read student reviews on Trustpilot, or watch student review videos here.

Certainly, right here.

This is designed for all Python-using technology professionals, who work in wide and diverse ways. As such, we fully support every IDE and editor, and the three major OSes (macOS, Windows, and Linux).

Email us at service@powerfulpython.com and we will respond as quickly as possible.

What Our Alumni Say

-

Juan Arambula, Software Engineer

I tried other premium Python content, and they didn't go deep enough. I found they pretty much repeated each other. And when I tried Powerful Python, it was quite different. I was able to learn in a matter of weeks all I needed to learn about Python to use it proficiently, something that I feel would have taken me months to learn on my own. So I really recommend it to everybody.

-

Jeffrey Smart, Risk Manager & Software Engineer

I just had a round of interviews for a software engineering position, and I'd say about half of the programming examples were all but taken directly from Powerful Python materials. So when I would see these questions I really could hit the ground running.

-

Erik Engstrom, Embedded Systems Developer

Powerful Python's first-principles approach was the primary thing that really attracted me to it. The idea of mastery is something that was very attractive and something that I wanted. And so it's been very valuable. And I would highly recommend it to anyone else. I really encourage you to consider it.

-

Anish Sharma, Software Architect

I always felt that I lacked a solid understanding of how Python worked and how I can work with it better... At the end of the day, [joining Powerful Python] is probably the best decision you have ever made. It's probably one of those secrets that most people don't share, but it's out now.

-

Best programming course I've found. Period. For developing tangible advanced skills and best practices to be employed in all facets of development, it was worth every nickel and I'd buy it again in a heartbeat.

Chad Curkendall, Civil Engineering

-

Adrián Marcelo Pardini, Senior Software Engineer

You haven't seen this kind of dialogue or one-on-one questions with the teachers in other courses. It was something very good for me. I hope to see you here!

-

Ping Wu, Cloud Engineering & FinOps

I wanted to use Python for reusable code with clarity, so that the "future me" can benefit from it. I feel like a bunch of doors have been opened for me to conquer for the next level.

-

Rahul Mathur, Test Automation Engineer

It's not the language that is important. It's your thinking and how that thinking applies to the language. Powerful Python permanently embeds that into your brain, and that helps a lot for working professionals.

-

Gary Delp, System Engineering and Integration

I've tried using Stack Exchange and textbooks and that sort of thing, and that's sort of piecemeal. With Powerful Python, I start from first principles. I commend it to your attention and suggest that if you're serious about doing coding, you'll really benefit from it. Cheers!

Written Testimonials

-

I'm probably Powerful Python Bootcamp's biggest fan right now. I just finished telling my family how I've never learned so much in such a short time as I have through your program.

Initially, I was unsure about joining, but after starting the Bootcamp, I'm certain it was exactly what I needed. It was the right decision, and I'm truly your biggest advocate.

Travis Lane, Data Engineer

-

Powerful Python really is the best way I've found to level up my Python skills beyond where they were already.

The course material demystifies things like testing and mocking, test-driven development, decorators and other things in simple but straightforward ways. Individually, the courses in the boot camp are priceless references, but I also found large benefit from the Slack and Zoom sessions.

You know, I've never been afraid to dig into the Python libraries that I'm using, find out how they work and what they're doing. But I've noticed that since completing the course, I'm able to dig a lot deeper and understand a lot more.

And not only that, but it's also extended to my own coding, where I can see a lot more of the project ahead of time before I'm even writing one line of code. How things should fit together and how to make my code more maintainable, more testable.

It's really been a great way to level up my Python, and I would certainly recommend Powerful Python to anyone at any level to help increase their Python skills.

Marc Ritterbusch

-

This is an absolute master class in Python. You will simply not find another Python class that presents such advanced material in such an easy-to-grasp manner. The video lectures are top notch, and the exercises do a phenomenal job of reinforcing the concepts taught. As a software architect with 20+ years of experience, I can tell you with absolute certainty that if you have a foundation in Python and are looking for one class to take your game to the next level, this is the class you should take.

Eric Kramer, Boston, MA, USA

-

Highly recommended!

Thanks to Powerful Python, I figured out how to learn a complex programming language like Python from scratch. I developed enough courage to not get scared to open any of the source code of the Python modules other developers built, because now I am curious to understand and learn the patterns they used.

I also learned the importance of test driven development and am building my team at work and constantly pushing boundaries of my QA team to embrace the concept of test driven development. It's hard as hell, but immensely satisfying.

In the process of learning all the courses in Powerful Python I developed a mental capacity to imagine matured object oriented models regardless of the language (Python or Java) and I am able to easily abstract technical complexities and converse with functional and non tech savvy people in a manner they understand and grasp stuff easily.

Santosh Kumar

-

I really enjoyed Powerful Python. The course is designed to take you from the beginner level to an intermediate and even advanced level in 3 months. Of course, the student must put in the necessary effort to achieve such goals. I had some Python foundation before taking this course. However, Powerful Python taught me a lot more by diving deep into classes, test-driven development, generators, dependency management and especially guiding the programmer to write a complex program from scratch.

Kiswendsida Abdel Nasser Tapso

-

This collection of Python courses is great! It skips the repetitive beginner stuff that every Python book has, and gets to the point quickly. He teaches you at a professional level, but it is still a very clear and understandable level. You will level up fast if you put in the work.

Powerful Python uses a fantastic "labs" format to get you to really practice and learn the content. He writes unit tests, and you write the code to make the tests pass. It's extremely effective and enjoyable.

I've learned a ton of production-worthy techniques. For example, the average Python tutorial will teach you how to read and use decorators. Powerful Python will get you to understand them, be able to write them, and then bend them to your absolute will to do amazing things!

Their coverage of generators has also transformed the way I work with geometry and graphics in Python.

Chris Lesage, Montreal, Quebec, Canada

-

A life changer.

I always wanted to know how Python developers were able to write such amazing and extensive libraries, but I couldn't find the right fit. Most material out there is either too broad (books) or too narrow (blog post). PP offered an opinionated approach to software development in Python, with lots of exercises and direct feedback from the Powerful Python instructor. In this case, opinionated is an excellent approach as it guides you to learn what matters to get your job done, without worrying about obscure methods or practices that are almost never used.

PP moved me from Python enthusiast to Pythonista!

Rafael Pinto

-

The course for intermediate Python users. It's the exact course I needed to go beyond the basics of Python.

Material is presented in a digestible manner and the exercises help to solidify your understanding.

George McIntire

-

Comprehensive, deep and highly structured. The Powerful Python (book and Bootcamp) is, as the name suggests, a powerful enabler for anyone seeking to 10x their Python development skills in a short time.

Given the plethora of courses on the subject, it might be tempting to improve skills incrementally by wading through blog posts, tutorials, and StackOverflow queries. These resources in fact are repetitive, unstructured, and can be overwhelming.

If you want to make fast progress towards your goals, have a systematic understanding of the language, and avoid wasting time by re-learning low-level concepts across various materials, Powerful Python is the surefire way to go. Soon you will find that you are incorporating advanced Python features and software engineering concepts in your own codebase.

Asif Zubair

-

Take your Python skills to the next level. Powerful Python is a well structured approach to tackling intermediate and advanced Python and programming topics. I have gained a better grasp of object-oriented programming and approaches for implementing test-driven development. The exercises and labs provide practical examples and allow you to observe your Python skills "leveling up" as you successfully complete each assignment. I highly recommend this course for anyone seeking to take their Python skills to the next level.

Matt Geiser

-

NOT your typical boring 'Beginner' content - Powerful Python will take you to the next level.

If you're in that stage of your Python journey where you're beyond the 'basics' and looking to take it to the next level, this is the course for you. A while back I found myself in a rut where I wasn't making the leaps I was during the beginning of my Python quest. Luckily I decided to purchase the book 'Powerful Python.' It was the best decision I could have made at the time. Within just a few weeks, I started incorporating advanced features into my code, in particular, decorators.

When it comes to decorators, there's some basic tutorials out there that can help but unlike those other tutorials, the Powerful Python book was able to explain them in such great detail and allow me to understand not only the ‘how', but just as importantly, the ‘why.' Furthermore, the examples he provides are not the typical unrealistic kinds I'd find in so many other tutorials; his examples helped solidify in my brain the purpose and true power of decorators. The main point is – I started writing my own decorators soon after that were able to solve several obstacles I was facing. Actions speak louder than words and the fact that I was able to utilize this feature after reading his book should speak volumes.

The other great decision I made was to join Powerful Python. This is an extensive course which goes over many of the topics that never get mentioned in other tutorials such as test-driven development and logging. I had never written a single test before joining PP! What's more scary is that until joining Powerful Python, I did not even comprehend or appreciate the concept of writing tests for my code. Powerful Python provides the content in such a way that you truly appreciate the 'why' while still learning the 'how.'

One of the best features are the workshop labs. This is where your brain actually begins to grasp the topic presented. The labs are set up in a way that allows you to truly test your understanding of the various concepts presented in the course. Furthermore, many of the lab solutions (which are provided) include powerful and downright impressive algorithms. I would spend hours simply re-typing the algorithms used in the solutions in order to make myself become a better programmer.

Long story short – there are unlimited amounts of Python “beginner” courses and tutorials out there. However, there is a huge shortage in “intermediate/advanced” content. If you are one of those people like me that gets bored quickly and needs that new challenge to take things to the next level, then Powerful Python is the perfect choice.

Liam

-

A great resource for taking your Python skills to the next level.

I've only been able to work through the Pythonic Object-Oriented Programming course so far, but I love the video lessons, explanations and maybe, most of all, the exercises and challenges at the end of the lessons in the form of scenarios and tests. It's an absolutely brilliant idea and approach. I loved every minute I spent working through them. It gave me bite-sized, realistic and fun challenges to apply what I'd just learned.

It's changed the way I think about classes, objects and OO and how I can use them and apply it to my own code. And improve old code I've already written when I revisit it.

I'm looking forward to working through the additional courses and topics. I know the other courses are structured the same way and aimed at intermediate to advanced Python skills and what I need to understand about them to make sure I grasp the important Python concepts correctly... so I can move myself and my skills to the next level.

Nathan J

-

Powerful Python is really power to you. The instructors explain Python so that it becomes second nature for you. Everything can be applied immediately and the concepts are explained thoroughly. The support is amazing and the PP instructors are always there to help.

The contents are broad, and they are taken apart to be digested and later put back together seamlessly through the labs, which are a great resource for anyone wanting to apply the knowledge.

I have put TDD to work since the moment I learned it and it has made me realize how to construct software, so in essence, it has helped me, a nonprogrammer, start thinking like a programmer.

I totally recommend anyone reading this to take this opportunity and become fluent in Python once and for all.

Juan José Expósito González

Pythonic OOP

-

This class is an excellent introduction to the theory and practice of object oriented programming. You will learn how these concepts can be quickly and cleanly implemented in Python. The lectures and labs are clear and to the point - EXCELLENT instructor.

Mike Clapper, Oklahoma, USA

-

I'm going to use what I have learned from this course right away. I have a current application and an older one that I have been nursing through my entire Python life that I will remake and refactor.

Tipton Cole, Austin, Texas, USA

-

I liked the course very much. Expect to kickstart your OOP journey ahead of an average beginner. Final verdict: recommended.

Konstantin Baikov, Nuremberg, Germany

-

Right from the start of teaching myself Python, I was wishing for some learning material that was more like mentoring and less like instructions. For me, Pythonic Object-Oriented Programming is just that.

Bryan Stutts, Greenwood Village, Colorado, USA

-

This course is by far the most exhaustive OOP course in Python I've taken... If you're interested in learning about OOP in Python, and even if you think you know everything there is, I highly recommend taking this course.

Hana Khan, Santa Clara, California, USA

-

The course is a great introduction to object-oriented programming in Python. I was pleased with your emphasis on the Single Responsibility and Liskov Substitution Principles - adherence to these two guiding principles definitely leads to more robust, testable solutions... There is a need for this course out there.

Michael Moch, Sachse, Texas, USA

-

Previous Python courses I took teach OOP in a 1 dimensional way... the truth is, in Python you are free to do OOP the way you like. The instructor teaches you this freedom while also teaching you to be responsible about it.

Hassen Ben Tanfous, Hammam Chott, Tunisia

-

You have assembled a really interesting, in-depth and useful course with what I now know to be your trademark qualities of enthusiasm, attention to detail, clarity and erudition.

Tony Holdroyd, Gravesend, United Kingdom

-

I felt this course is much needed to really understand the power of Python as an object oriented programming language. I read a lot about Python but didn't find any such course where you learn some really interesting concepts with such ease.

Kapil Gupta, New Delhi, India

-

This course is awesome! The instructor has the unique ability to make abstract (and difficult) concepts so understandable... I highly recommend this course to anyone who wants to not only use OOP, but also to understand what goes on under the hood and makes a powerful Python pattern.

John Tekdek, Milton Keynes, England

-

These labs are one of the best learning experiences I've ever encountered for programming. Other courses and books feel like parroting or copying code. The instructor gives you creative space to practice developing and problem solving.

Chris Lesage, Montreal, Quebec, Canada

-

Even the structure of the labs provided helps me to see better what it is to be "thinking like a programmer".

Bryan Stutts, Greenwood Village, Colorado, USA

-

The lectures and labs are clear and to the point - EXCELLENT instructor.

Mike Clapper, Norman, Oklahoma, USA

-

Your dataclasses video was AWESOME, and I'm saying that after watching Raymond Hettinger's video on dataclasses.

Konstantin Baikov, Nuremberg, Germany

-

Final verdict: recommended.

Konstantin Baikov, Nuremberg, Germany

Test-Driven Python

-

This is a GREAT course. The videos are well-paced, clear and concise, and yet thorough in the material they cover.

Michael Moch, Texas, USA

-

After you take this course, you'll be confidently doing test-driven development like a pro!

Hana Khan, California, USA

-

I just completed the full course and it's awesome... I wish you'll continue doing such courses and help us increase our Python knowledge.

Kapil Gupta, Delhi, India

-

You will gain valuable new skills that will demonstrably lighten the load, and relieve unnecessary burden from your programming process. Improve your mental state and get healthy with Test-Driven Python.

Bryan Stutts, Colorado, USA

-

As with all courses from Powerful Python, it clearly sets the problem you are solving and guides you step by step to your first "Aha" moments. The videos are detailed and in-depth enough to warrant a second re-watch after you have the main concepts settled.

Konstantin Baikov, Nuremberg, Germany

-

Another thumbs-up! For some time, I was looking for resources useful for people like me, wishing to learn more about Python and not the basics over and over. Teachers like you are a big BIG blessing. THANKS!

Javier Novoak, Mexico City

-

The course progression is clean, there aren't any long stretches or missing concepts. Compact and universally applicable concepts, most likely the only Python TDD course and reference you'll need for years.

Hassen Ben Tanfous, Tunisia

-

This course covers a lot of ground, not only explaining mechanics and techniques of test driven development... but also delving into strategies.

Tipton Cole, Texas, USA

-

This is a very well presented and structured course on unit testing and test-driven development. The instructor goes to great lengths... His obvious enthusiasm and passion are both infectious and motivating. The practical coding exercises are well written.

Tony Holdroyd, Gravesend, UK

-

It's one of the finest courses... covering from the basics, and ensuring you get a full professional hand on having fully tested Python code. The lesson on mocking is WONDERFUL.

Kapil Gupta, Delhi, India

-

It's clear that the instructor is passionate... This course thoroughly covers the pros and cons and appropriate use cases for various types of tests so you know exactly when to use what type of test for your code...

Most of all, this course helped me unlock some very powerful abilities of Python that I didn't even know about. After you take this course, you'll be confidently doing test-driven development like a pro!

Hana Khan, California, USA

-

As someone who has used and practiced TDD for years, I highly recommend it to anyone starting down the road to TDD mastery. If you're an experienced developer familiar with nUnit testing but adopting Python as your new language, I also recommend this course.

Michael Moch, Texas, USA

-

The smooth presentation and progressive exercises helped to cement these concepts for me... The functionality around decorators is particularly well presented - taking one from basic to advanced usage.

Asif Zubair, Memphis

-

Once again, Thank you for giving me a superpower :) Unit testing gives me the discipline to write code, and the reward I am getting is highly valuable. All of the course is greatly structured... Labs are designed perfectly.

Shankar Jha, Bangalore, India

-

The course is excellent value for money, especially with the bonuses. Recommended.

Tony Holdroyd, Gravesend, UK

Scaling Python With Generators

-

The instructor does an excellent job of explaining this compelling and often confusing feature of Python. The leisurely pace of the course makes it easy to follow. I now feel confident in using generators to scale my code. Thanks!

John Tekdek, Milton Keynes, England

-

I am completely amazed by the coroutine concept. I did not read ANYTHING like this about generators ANYWHERE ELSE!

Shankar Jha, Bangalore, India

-

An EXCELLENT course... explaining a variety of concepts and techniques, including the concepts and uses of coroutines, iterators and comprehensions. Thoroughly recommended!

Tony Holdroyd, Gravesend, UK

-

Another excellent Python class from an excellent instructor.

Mike Clapper, Oklahoma, USA

-

The course went deep into Python GEMS... Every time I go over a course by this instructor, I learn a lot. Which very much helps me in my day to day Python development.

Kapil Gupta, Gurgaon, India

-

This wonderful course... Greatly structured... Good for advanced programmers who want to level up their skills.

Shankar Jha, Bangalore, India

-

Clearly illustrates concepts in Python scalability... Clear lectures, meaningful lab exercises... students will gain invaluable insight into Python internals.

Mike Clapper, Oklahoma, USA

-

Once again, Thank you for making me a seasoned Python developer :)

Shankar Jha, Bangalore, India

-

The course is excellent value for money, especially with the bonuses. Recommended.

Tony Holdroyd, Gravesend, UK

Next-Level Python

-

This course was the f*cking best training I have ever taken. You rock.

Abdul Salam, Lahore

-

Next-Level-Python provides you an opportunity to learn at a deep level...

The [top secret] section is a great example of not just being taught to use a tool, but is used by this amazing teacher to further my understanding of the way Python ITSELF is designed...

Bryan Stutts, Colorado, USA

-

I took your other courses also. But personally, I think this one is one of the best video lectures I have ever seen in terms of video as well as in the coding exercises....

All modules are structured properly and the way you break down each and every topic was very good...

It's a great course and you are really providing the rare content which is more focused on becoming a great developer. Thank you for making me a seasoned Python developer :)

Shankar Jha, Bangalore

-

This course bootstraps a programmer with a good general knowledge of Python to a higher level of understanding, appreciation, and skill.

The instructor is a methodical, erudite, patient and highly focused teacher who goes to great lengths to explicate and get into all the nooks and crannies of his subject matter.

By the end of the course, if followed assiduously, you will certainly have raised your Python game.

By the end (assuming you have completed all the labs) you will certainly find yourself far more knowledgeable about Python.

This course is excellent value, and highly recommended.

Tony Holdroyd, Gravesend, UK

-

Final verdict: recommended.

Konstantin Baikov, Nuremberg, Germany

The Powerful Python Book

-

Tim Rand, Chicago, IL, USA, Director of Data Analytics

I'd like to review Powerful Python. It's a very well written, concise, focused book that will improve intermediate to advanced Python users' abilities. I learned how to code on my own in grad school, to analyze my own data. And along the way I started to realize that there were some holes in my understanding of topics like generators, iterators, decorators, magic methods. And around that time, I encountered Powerful Python. And I was impressed by how much overlap there was between the topics covered in the book and the topics that I was already interested in learning more about. After reading the book, I feel a lot more confident in my understanding of those topics. And just as an example, I was able to find functionality that was missing in an open source project. And open the source code, read through it, understand what was going on. Leverage testing, logging, and debugging in order to add more code, more functionality to the project. And it's a very fun feeling to be able to take on something complex like that. Going into someone else's fairly large code repo, break it down, focus on the right areas, understand the way that they're using their classes and build on top of what's already there. I think that's a lot more challenging at least for me than writing code from scratch in a like, clean slate environment where you can just do whatever you want. I owe a lot of that success, that understanding to Powerful Python. And the topics that I learn more about from that book.

-

Josh Dingus, Fort Wayne, USA, Programmer/Analyst

Hi everyone. I'm Josh. I'm here to share my experience with the amazing book called Powerful Python. This book is packed with valuable insights, tips and tricks that have really taken my Python programming skills to the next level. One of the things I'm most excited about is the way Powerful Python goes beyond the basics to teach advanced programming techniques. It covers topics like decorators, context managers, and list comprehensions, in a way that's easy to understand... Even if you're new to Python. It also taught me how to write cleaner, more efficient code by using Python's built-in functions and libraries. No longer reinventing the wheel, or spending hours debugging messy code. The author uses real-world examples, practical exercises, and step-by-step guidance to make even the most complex topics easy to grasp. Plus the conversational writing style makes it feel like you're learning from a friend rather than a boring textbook. I also appreciate that the book is organized in a way that lets you jump to specific topics, or read it cover to cover. This flexibility allowed me to tailor my learning experience to my own needs and interests. Since reading Powerful Python, I've seen some impressive results in my programming projects. My code is cleaner, more efficient, and easier to maintain - which has saved me time and frustration of debugging poorly written code. I've also found myself tackling more complex projects with confidence, knowing that I have the tools and techniques that I need to succeed. All in all, this book has truly helped me level up my Python coding game. If you're on the fence about reading Powerful Python, let me tell you it is worth it. Whether you're a beginner looking to deepen your understanding, or an experienced programmer seeking to sharpen your skills. This book has something for everyone. In a world where Python is becoming increasingly important, investing in your programming skills with Powerful Python is a smart choice.
Trust me, your future self will thank you.

-

Gil Ben-David, Bat-Yam, Israel, Data Scientist

Hey. My name is Gil. And I'm talking to you about how reading Powerful Python has boosted my career. First of all, I'd like to tell you that I'm a developer for several years with a background of C, C++, and .NET. And when I started to learn Python, it was super fluent and really easy. Great for scripting and just open with a server. And I really thought that I know everything to know about the language. But after I read the Powerful Python book, I really changed my mind. And I realized that there's so much to learn. And the book really give you the most super cool concepts that change Python than other languages, basically. And what was amazing in the book was beside their super cool concepts like generators, decorators, and even teach you the most basic thing. Like how to write this properly, and how even to log. How to logging. It was really amazing. And of course the writing was super fluent, clear, and concise. And I really recommend you to open it. Either you are a beginner or an intermediate, or a professional Python developer, I'm sure everybody has something to learn from this book. And I'll tell you after I ended the book and start to implement its concepts in my day to day work, first of all I got promoted after a couple of months. And I really like saw that my code was much, much better. More organized and I got really more confident about coding. My team saw it, my manager saw it. And I got promoted from that. And now when I look at my old Python script, I get cringe. And it's really a nice feeling to know how much I boosted from this book. And so if you're on the fence... You don't know, not sure if you want to read it, or try something else, I really recommend you. You'll FOR SURE get something good from the book that will really excel your abilities.

-

David Izada Rodriguez, Senior Software Engineer, Florida, USA

Hello. I would like to speak about the book Powerful Python. Our company, a private company, is interested in creating courses for data science. And we decided to try to teach only the basic Python required for explaining the foundations of the designs, and leave all the deep knowledge about Python to other courses. And when we were involved writing what to indicate to our students, we found about this book, Powerful Python. I really like the book. Mainly about the iterators, decorators, test-driven development, logging and environments. Virtual environments. Missing is we have had a lot of courses, some of them use specific packages. And sometimes, they are not compatible with the latest version of Python. While in other cases, you can use any version, so all of these is interesting. It was interesting for us, as developers. Because we provide libraries for our customers. But we just put in our page that they should go to Powerful Python to acquire that book and take care of learning why we did what we did in that implementation. Maybe in the future, we will do something between what we are offering now and what is Powerful Python. We don't want to repeat all that job is really wonderful, but our customer just need the information to understand data science. So all these details are more for developers. Professional developers than student of data science. I'm really grateful that I discovered this package. Thank you!

-

Gary Delp, Principal Engineer, Rochester MN, USA

Hi, I'm Gary Delp. Just a simple engineer from Minnesota. And I'd like to talk to you about something that has changed the way I think about code. And you know, I've been thinking about code for six decades or more. It's the book Powerful Python. It gives you... It guides you into thinking about coding and data and solving problems from a different point of view. One thing is that it's a short book. It doesn't have all of the details. You can go to docs.python.com - er, .org - and get right to the actual production code. And you can look in details and find out about that. But the author has curated a set of topics, that lead you into discovering for yourself. And when you discover things for yourself, you learn them; you know them; and you can use them. So some of the things are comprehensions, which is a way to clearly and easily structure new data streams. And because there are iterators in there, the code doesn't have to run unless you're going to use the data. Because the iterator takes it all the way back to the start. And you don't have to do it. A big deal about what Powerful Python teaches you, is how to stand on the shoulders of those who have gone before. Using decorators, using the libraries, understanding how they go together for just long enough to internalize how to use them. So. I really want to commend Powerful Python to your consideration. But only if you're gonna take the book and use it as a guide to take you through improvements. To take you through mind changing paradigms. It's just wonderful. So it's not just what's in the book Powerful Python, but it's the way that it's presented that makes it particularly valuable. So if you want to decorate your life, improve your life, get the book. Use the book. Become changed, and it sounds like I'm overselling it here... But it works. It works. So fair winds, and a following sea.

-

Nitin Gupta, Nashville, USA, Data Engineer

Hi, this is Nitin Gupta. And today I'm going to talk about my Python learning, you know journey. And what was my experience, what all the challenges I went through. And how I like, you know, tried to overcome those challenges. So just a quick background on my side, so I'm a like a SQL and like a data-side legacy sequel and are like a data guy. Mostly working on SQL Server and then, you know on the ETL tools. Various ETL tools. And certainly like you know because of those cloud adoption and digital transformation. I was moved to another project, so initially part was on SQL Server. But like and most of the things were like on the Python, and some - Those processing and like building the data pipelines and all those things. So as a lead guy for that project, I had to like you know go and get more insight on mastering into the Python. And since it was new for me and with like, you know, complex project I was facing lot of challenges and difficulty. So I started looking around a lot of stuff, to just get a good insight into the Python and started looking at the stuff around the internet. And then subscribed for some of them, online learning courses like Udemy and other courses. And that give just an initial or whatever you think. But I would say like know for my project requirement or like you know, more like a practical "use it" perspective, it still there was not sufficient and I was still missing something. And for that purpose, I started looking some more you know and searching around like of what I can use. And in that search I found like Powerful Python. And I subscribe to the book. Powerful Python book. And I went through it, the different courses and different chapters. And it was - I was surprised that it has helped me a lot. Mainly like, you know, some of the core concept and then into the object oriented and advanced Python concept like generator functions and like a decorator and other stuff.
And the more I started looking into it, I was getting on more like you know a new insight, in-depth knowledge into the Python thing. And how I can use it. So more like a practical oriented from the application side. I was getting you know a good insight or help from that material. And I really appreciate and thank to have Powerful Python for you know sharing those content and making, you know, my journey so easy and smooth. Because some of the folks who are just new to the Python, and they have to work on a project, which is practical project. Getting yourself comfortable and getting that level of mastering or comfort on the skill, is very difficult unless you have the right tool or right material. And I think in that sense, Powerful Python has helped me a lot. And I still follow whatever their courses, boot camps, or any materials what is available from Powerful Python and whenever anything new, webinars, and I subscribe and follow those materials. They help me a lot in my learning. And you know becoming a better powerful - and, you know, a better developer on Powerful Python. Thank you.

-

Johnny Miller, Laramie, Wyoming, USA, Business Owner

Hi my name's Johnny. And I am from Wyoming. I run a handyman business, and I'm a self taught programmer. Still new in it. I'm working on building a Flask app for just like simple employee time sheets and stuff. As well as basic understanding of web sites and that sort of stuff, for doing my own future web sites as I go. I just became - I just got done with my bachelor's degree in accounting, and that's the career I'm pursuing now. And in all this, programming has just a bunch of advantages for me that I can see. And I've done a bunch of different things out there. But I purchased the Powerful Python book. And it's really helped me to "get" a bunch of these ideas that I've read, and practice things in other places to really cement as I read through the book, before and after I've done these. I have it on my phone, have it on my two different computers, and it's just a great resource for me. And I don't remember - I've had it for a while now, so I don't remember what I paid, but I have been very happy with it. And it's just been an asset that I use over and over. And I would definitely recommend it to people. Having the digital copy is great, and the resources that I've received from Powerful Python have been really good at breaking it down. Being intuitive, and helping me like I said get that - a better understanding every time I read through it, especially after projects I've been working on, and just kind of follow and in figuring out not quite knowing what's going on underneath the surface. Which I feel that that's what it's helped me to do. So, I would definitely recommend. I'm very happy and I continue to follow the stuff that's put out by them. And I just wanted to say those few things. Thank you.

-

Michael Lesselyoung, Madison, South Dakota, USA, Owner of CSI (Computer Systems Integration)

If you're tired of searching books, videos, courses, and endlessly starting with "hello world" examples, or skipping over chapters just to find some nuggets... Powerful Python is what you've been missing. Read the book and learn REAL programming skills that you won't get anywhere else. If you code the examples in the book, you'll get an understanding of Python that others seek out for years to obtain. And some never reach that level. The explanations for the examples are not only thorough, but also tell you what not to do, and why. I've never found an error in any of the examples. They've all been tested and work as described. So you're not wasting your time trying to figure out what went wrong, or why something doesn't work. Powerful Python has the ability to give you deep understanding that not only gives you all the tools to be able to utilize the power of Python, but the psychological methods of learning that makes learning Python successful and build long lasting principles. I highly recommend the Powerful Python book.

-

Todd Rutherford, Sacramento, CA, USA, Data Engineer

I would like to speak about Powerful Python, and a way - how it has changed my outlook and my thinking on when I approach certain programming issues. Especially when being as a data engineer, moving data around the using different type of decorators, exception handling, and errors that I come across using Powerful Python allowed me to really demonstrate that skillset, and understanding how powerful Python can actually be. And the book actually having examples and walk-throughs as well, and just the understanding of what other functions are out there that can be utilized. What other techniques that are out there that you may not get in the normal organization or developer or engineering role. And Powerful Python allowed me to really scale my coding and in a way that has made my life a lot more easier. And my day to day work allows me to automate my life a lot easier. Understanding you know classes and objects beyond just the basics. I've taken classes in Python, doing like data science, I've read books from Springer on data structures and algorithms. And that has never come as close to where I was able to fully understand... Just with generators alone has been allowing me to really be able to scale. And my code and my programs are now where I'm able to process data in larger amounts other than breaking everything down. And really using like sharding techniques, able to create a lot of collections. Comprehensions. List comprehensions. A lot more easier. Multiple sources and filters comprehension. And I think you can't go wrong for Powerful Python. As any other program or course that's out there, because it allows you to really change your way of thinking. In your mind thinking. And that so - that's where you want to be. And that's where I wanted to be as a programmer. It was really just getting to my - getting a senior level thinking, a senior level developer's thinking, and how to efficiently write Python. I think you can't go wrong. It's great.
It's comprehensive. It's not a whole lot - it's not a five hundred page book. It's not a seven hundred page book. I recommend Powerful Python for sure. I recommend also doing any of the training courses as well, because the coding examples that you get, the solutions, walk-throughs as you'll see, and you'll just see a new level of interest grow. Instead of just being an introductory level of Python, you get into more an advanced level of Python. Which you want to be. You want to be able to write your own programs, your modules, be able to debug your own code, and be able to be a staff level engineer. And that's what one of my biggest goals was to be a staff engineer, and moved more into machine learning. And engineering as well. And AI.

-

Your book is absolutely amazing and your ability to articulate clear explanations for advanced concepts is unlike any I have ever seen in any Programming book. Thanks again for writing such a good and thought provoking book.

Armando Someillan

-

Your book is a must have and must read for every Python developer.

Jorge Carlos Franco, Italy

-

Feels like Neo learning jujitsu in the Matrix.

John Beauford

-

Powerful Python is already helping me get huge optimization gains. When's the next edition coming out?

Timothy Dobbins

-

It's direct. Goes right for a couple of subjects that have real-world relevance.

Chuck Staples, Maine, USA

-

A lot of advanced and useful Python features condensed in a single book.

Giampaolo Mancini, Italy

-

I just wanted to let you know what an excellent book this is. I'm a self taught developer and after having done badly at some interviews I decided to buy your book. It covers so many of the interview questions I'd got wrong previously... I keep going back to your book to learn python. I've actually recommended it as an interview guide to some of my friends.

Fahad Qazi, London, UK

-

Thanks. Keep up the good work. Your chapter on decorators is the best I have seen on that topic.

Leon Tietz, Minnesota, USA

-

What have I found good and valuable about the book so far? Everything honestly. The clear explanations, solid code examples have really helped me advance as a Python coder. Thank you! It has really helped me grasp some advanced concepts that I felt were beyond my abilities.

Nick S., Colorado, USA

-

Only a couple chapters in so far but already loving the book. Generators are a game changer.

Ben Randerson, Aberdeen, UK

-

I'm finding your book very insightful and useful and am very much looking forward to the 3rd edition. I'm one of those who struggled with decorators prior to reading your book, but I have greatly benefited from your rich examples and your extremely clear explanations. Yours is one of the few books I keep on my desk.

Tony Holdroyd, Gravesend, UK

-

LOVING Powerful Python so far, BTW. I started teaching myself Python a while ago and I totally dug the concept of generators. It reminded me of Unix pipes -- I started using Unix in the 70's -- and the generators basically let you construct "pipelines" of functions. Very cool, much better way to express the concept, awesome for scalability. But I was a bit hazy on some of the details. PP clarified that for me, and several other features too. And I'm only up to chapter 3. :-)

Gary Fritz, Fort Collins, CO, USA

-

Thank you for your book. It is packed with great information, and even better that information is presented in an easy to understand manner. It took approximately a month to read from cover to cover, and I have already begun implementing the vast majority of the subjects you covered! I gave your book five stars.

Jon Macpherson

-

This is among the best books available for taking your Python skills to the next level. I have read many books on Python programming in a quest to find intermediate level instruction. I feel there are very few books which offer the sort of insights needed to really improve skills. This is one of the few I can highly recommend for those who are struggling to achieve intermediate skill in Python. The author clearly has a mastery of the topic and has an ability to convey it in an understandable way.

Darrell Fee, USA

-

Just what I needed. Very much applicable to where I'm at with Python today and taking my coding to the next level. I got a ton of insight with this book. It really brought to light how magic methods can be used to develop things like Pandas. It opened my eyes to how awesome decorators can be! The classes section, logging, and testing were awesome. I need to go back over the book again and work thru some of the techniques that are talked about so I can get used to using them every day. I currently do a lot of implementation work with data and SQL Server. Anything outside of SQL that I do, I do in Python. A problem for me has been that I get so many odd type jobs that my code is pretty spread out across a broad range of functionality. I've been wanting to stream line it, and write my code in a way that it can scale up. At my job, I'm writing, testing, implementing, and maintaining my own Python code. I don't get code reviews, and so it's hard to know if I'm writing good code or not cause I'm not getting that critique from my betters. I think you have definitely given me some broad ideas on being able to scale, and some things that I should strive for in my code.

Josiah Allen, USA

-

This book taught me a great deal and was enjoyable to read. I have been developing with Python for 5-6 years and considered myself well versed in the language. This book taught me a great deal and was enjoyable to read. The complex patterns are explained well with enough detail to understand but not too much to confuse the reader. After having the book for one day I recommended it to my team and will make it required reading for our interns and junior devs. Note: I purchased this book and did not receive anything for this review.

Aaron (not the author), USA

-

Great Book. By far one of the best books out there. No nonsense, just great information.

Edward Finstein, United States

-

Great coverage not offered in other books.

Tony, United States

-

Amazing work! I wish all technical books could be this good. As an instructor and researcher, I read dozens a year and this is pure platinum. Thanks, Aaron!

Adam Breindel, United States

-

Truly next-level Python. Concise to the point and practical. Will take your python to the next level.

Amado, United States

-

Great Python book. One of my favorite Python books that show great examples on using the language effectively.

Bryant Biggs, United States

-

Great book. A lot of great tips for those beyond a beginner.

Jeff Schafer, United States

-

Five Stars. Awesome book, helpful in moving beyond basic coding.

Mark Vallarino, USA

-

Instant productivity gains. These are the best pages you will find on Python if you're an experienced programmer looking to make a leap in your python skills. The entire book takes only a few days to read, and it's very enjoyable. I'm already seeing the rewards in my code as I have shifted from writing simple scripts with 1-2 normal functions to writing a unit-tested class which creates its own decorated functions at runtime (data-driven), overloads operators, and properly handles and throws exceptions. Nowhere else have I found so many of python's unique features explained so clearly and succinctly as I have in Powerful Python.

Carolyn R., USA

-

A great attribute of this book are the practical examples. I've been programming with Python for about 1.5 years and this book is right up my alley. A great attribute of this book are the practical examples, and comments on Python 2 vs 3 syntax changes. Aaron describes things in great detail so implementing his ideas is very natural.

Mike Shumko, USA

-

Great book, great lecturer. Took a session with Aaron at OSCON recently - very hands on, great instructor. He handed out his book to all of us - it has a lot of useful examples and gives some great tips on how to refine your Python skills and bring your coding to the next level. If you want to move from beginner python and go to intermediate/advanced topics, this book is perfect for you.

Gary Colello, USA

-

This book has a lot of useful examples and gives some great tips on how to refine your Python skills and bring your coding to the next level. If you want to move from beginner Python and go to intermediate/advanced topics, this book is perfect for you.

Anonymous reviewer

-

Takes your Python coding skills from average to awesome. This man knows what he's doing. I have been reading his online stuff and have taken classes with him. And now this book. OH, THIS BOOK! He makes quick work of some advanced and esoteric topics. If you want to be a Python programmer a cut above everyone else, and ace the tough topics in interviews, this is the one for you.

Hugh Reader, USA

-

Five Stars. I've studied this deeply, the author has been a big contributor to my lifetime development in software engineering.

Refun, USA

-

Take your Python skills to the next level. Looking to go beyond the basics, to broaden and deepen your Python skills? This is the book for you. Highly recommended!

Michael Herman, USA

-

Move to this book if you already master the basics. There are very few books that can bridge the gap between basics and advanced level and this is one of them. If you already feel comfortable with lists, dictionaries and all the basics, this book will take you to the next level. However, I would recommend reading this book AND using your python interpreter to repeat all the examples in the book and lastly make some notes like writing down a code snippet so you can always remember and don't need to read it all over again.

Rodrigo Albuquerque, UK

-

I think it's a pretty fine book.

Chip Warden, Kansas City, USA

-

If you have enough basics of Python, this should be your next read. This is really good for intermediate Python programmers, who have mastered the basics and are looking for new ideas on various new modules and features out in Python. Also the author explains few gotchas and secrets from the woods to make you a sound Python pro.

Andrew, UK

-

Excellent book. A great book that will help in real world production-level programming. I found the explanation of some complex topics very easy to understand. An example of this are the chapters on decorators and testing. I would recommend this book to anybody who already knows Python and wants to take it to the next level. While there are other books in the market that have more detail, the selling point of this book is that it is simple yet highly effective. The author shares a lot of best practices used in the real world which I've noticed that a lot of books leave out.

Raul, Canada

-

Advanced Python! Great book, Python finally explained in an advanced and in-depth way, is not suitable for beginners. You need to have some basics.

Alex, Italy

-

Five Stars. This is really good book.

Marcin Adamowicz, UK

-

Great book. Warmly recommended to all Pythonistas.

Giovanni, UK

-

This book is exactly what I'm looking for. I found a few code examples on my Facebook page yesterday and it fires up my passion to code immediately. Today, I browsed the chapter index and just finished reading "Advanced Functions". It's exactly what I need. It skips the basic syntax and focuses on the areas that separate top developers from the rest. In fact, there aren't many topics in these core areas. However, this book will help both engineers and EMs think in Python cleverly when attacking all kinds of DevOps problems.

Shan Jing, Los Angeles, CA